A decade ago, a college diploma practically came stapled with an offer letter. To us stumbling seniors circa mid-2010s (pre-Transformer architecture, pre-ChatGPT), there seemed to be an invisible conveyor belt between the final exam and the badge printer. If we solved enough algorithms, went to office hours, stacked a few internships, a door somewhere would swing open.

Then came the warnings:

AI Could Wipe Out 50 Percent of All Entry-level White-collar Jobs

Next the headlines:

For Some Recent Graduates, the A.I. Job Apocalypse May Already Be Here

Finally the stories:

Goodbye, $165,000 Tech Jobs. Student Coders Seek Work at Chipotle

The golden handcuffs of Banking, Consulting, and BigLaw we used to joke about (favorite Microsoft Office app: Excel, PowerPoint, or Word?) suddenly became lottery tickets to enter the chocolate factory. Breaking into Big Tech felt like musical chairs with the music turned off. Keeping a job meant staying safe until the next perf cycle. Even freakishly remarkable MIT kids were suddenly enrolling in more school so they could wait it out in purgatory. What are the rest of us to do?

What follows is a 4-part exploration to price the risk and post the bond of AI takeoff:

Guarantees for people

Gates for machines

Scoreboard for judgment

Exchange rate for credibility

If we want trust, we need to post collateral. If we want better work, we need a better scoreboard. In this moment, both human and machine intelligence are frontrunners in the race against time. The difference is that only one of them can feel responsible.

Opportunity Area 1: Guarantees for People

Banks don’t need to be empty to fail; they just need their depositors to think tomorrow’s teller will say, “Sorry, we’re out.” Labor works the same way.

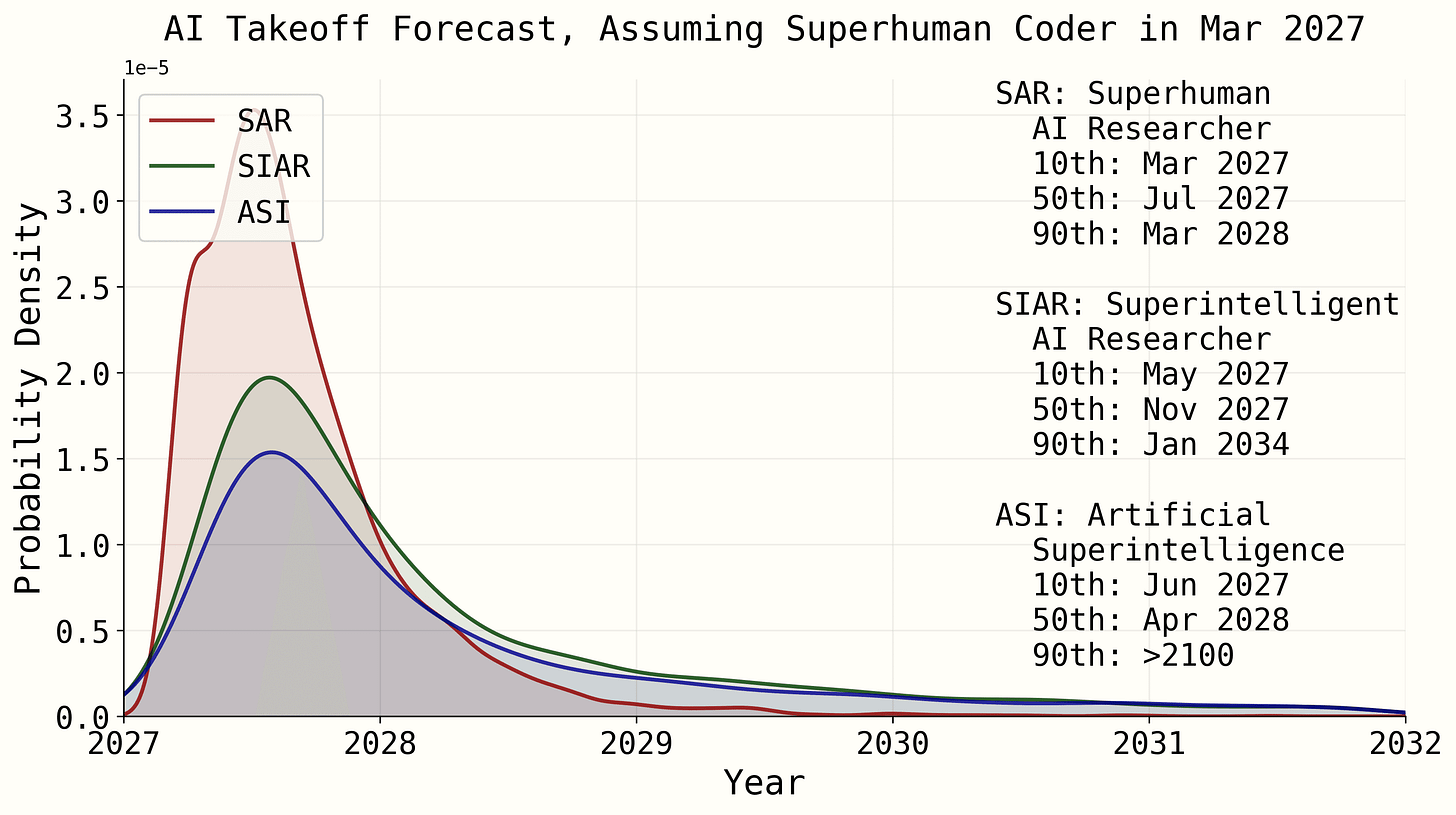

Panic sets in before pink slips do. If enough people decide there’s a time-based hatch under their desk, they won’t wait to test it out. They take their credentials, update their resumes, stop sharing knowledge, and head for the exit. Managers quietly freeze hiring. Boards ask for efficiencies. Headlines say 30% of code at Google is written by AI (see: autocomplete). Overnight, every startup codifies a KPI for it too. Suddenly, the fear that began as a rumor gets its first receipts.

It’s comfortable to pretend the AI backlash is about ethics, energy, the value of care, or the dignity of work. But for anyone who’s ever been close to the knife’s edge, it’s about having to choose between your mortgage, childcare, or healthcare bill when the storm hits and you’re in the middle of a roof repair.[1] I’m tired of hearing: “You are not going to be replaced by AI. You will be replaced by someone who is using AI.”

Maybe. But enough augmentation is replacement, and if automation outpaces growth, the org chart won’t look the same even as it grows. Deposit insurance stops a bank run because there’s a guarantee behind it; “we value our people” memos won’t stop a run without one. Trust needs collateral and acts like liquidity, disappearing when we need it the most.

What I’d do as a public-company executive:

Treat fear like a balance-sheet risk, not a comms problem. Put in writing, signed by the CEO, what happens before and after a new AI or automation initiative lands.

Post collateral to underwrite trust. Include redeploy-or-release SLAs, a funded re-skilling budget, and clear acceptance criteria for where AI stops and people start.

Tie exec comp to keeping those promises. Employees get insured transitions and visible ladders. Customers get brand leadership and fewer surprises.

If trust is the risk, underwrite it. Then, and only then, let the models in.

Opportunity Area 2: Gates for Machines

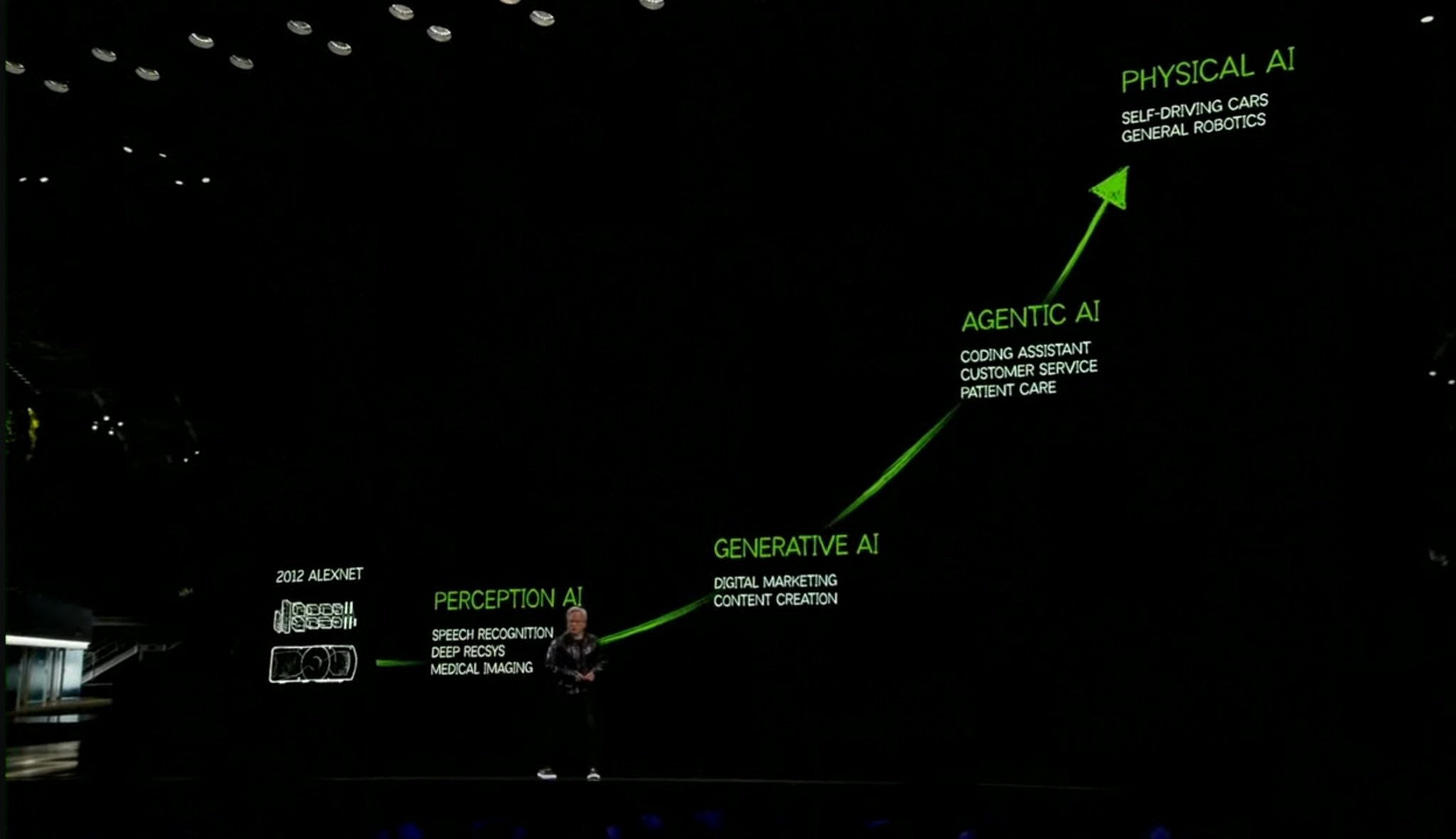

Moravec’s paradox is the rudest party trick in computer science. It’s why AI makes art while humans apply underlayment: skills that appear effortless (physical labor) are difficult to reverse-engineer, while skills that appear effortful (cognitive processing) may not be difficult to engineer at all. The frontier of capabilities is jagged, so replacement rarely happens in one clean cut.

And while we might not get a single “replacement moment” for your job or mine, we’re already swapping out the planks. If an LLM takes one plank at a time (say: content creation for AR/PR, product launches, and sales enablement), the job keeps going with the wind in our sails: more velocity, less effort. If it eats the whole ship (say: aligning stakeholders across R&D and GTM, budget accountability to Finance, organizational change management from the C-suite to IC2), we watch the ship sail past the horizon and wonder why we’re in unfamiliar waters.

People say diffusion of new tech is slow because of (a) regulators and (b) curmudgeons. Sometimes. Changing people takes time; this is true. But a lot of the time, diffusion is slow because the real world is messy. In fact, the real world is built entirely of edge cases!

When diffusion lags or scaling plateaus, it’s often because edge cases are the bulk of the terrain, not the exception. Imagine you’re in charge of an enterprise rollout that needs to be fully automated. After studying hundreds of use cases and conferring with dozens of experts, you design a single “happy path” with a handful of manually handled edge cases. Within hours of launch, you uncover more new, creative, previously unimagined(!!) ways to fall off the happy path than you find customers who successfully complete your flow. This is a feature (not a flaw) of testing on the real world: where things break down is where product, eng, and UX design can convert edges into paved lanes. It’s also where judgment is needed most.

The product pitch says: “frictionless onboarding.” Customer legal says: “DPA, SOC 2, DPIA, data residency, SSO, SCIM, and hold on: who is signing the MSA?” The real world is a box of exceptions with some rules we sprinkled on top.[3]

How I’m approaching this domain at work:

Resolve each new edge case once. Pave it the next time. Use exception-handling as an opportunity to surface product gaps, not a ticket queue.

Expect to build an exception factory. Instrument fall-offs, contain them with the lightest viable human touch, and productize solutions for repeat offenders.

After a few iterations of this, automation is no longer a promise but the cumulative result of what we’ve built after we productized what used to break it.
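As a rough illustration of that loop, here is a minimal Python sketch of an exception factory. Everything in it is hypothetical: the `ExceptionFactory` class, the edge-case labels, and the `pave_after` threshold are invented for the example, and a real rollout would pick its own signals for when an edge case deserves a paved lane.

```python
from collections import Counter


class ExceptionFactory:
    """Toy tracker: count fall-offs, route them to a human, flag repeat offenders."""

    def __init__(self, pave_after=3):
        # pave_after is an assumed threshold, not a number from the essay.
        self.pave_after = pave_after
        self.fall_offs = Counter()  # edge-case label -> times seen
        self.paved = set()          # edge cases the product now handles itself

    def record(self, edge_case):
        """Instrument one fall-off and return how it should be handled."""
        if edge_case in self.paved:
            return "automated"
        self.fall_offs[edge_case] += 1
        if self.fall_offs[edge_case] >= self.pave_after:
            return "productize"  # repeat offender: build the paved lane
        return "human"           # lightest viable human touch

    def pave(self, edge_case):
        """Mark an edge case as productized so it no longer needs a human."""
        self.paved.add(edge_case)


factory = ExceptionFactory(pave_after=3)
for case in ["missing SSO", "missing SSO", "data residency", "missing SSO"]:
    print(case, "->", factory.record(case))

factory.pave("missing SSO")
print("missing SSO ->", factory.record("missing SSO"))
```

The point of the sketch is the ordering: count first, contain with a person second, and only build once the same fall-off keeps coming back.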

A single happy path is a marketing dream. Real work lives in the edge cases.

Opportunity Area 3: Scoreboard for Judgment

When we say “your job will be augmented, not replaced,” we should be more specific.

Augmented what? If we see a job as a list of verbs, we miss the nouns that make it coherent: relational work, judgment, accountability (the buck stops with you).

Accountable for what? Make evaluation central to the org, not an artifact of a post-mortem. If an agent does something consequential, a human signs the retro.

Will there be pain? There is always a bill. Progress just itemizes it differently every decade. Our job is to make sure the scoring rubric matches what matters: judgment under uncertainty when numbers alone are incomplete.

We can’t teach sound judgment by teaching to the test. We can, however, make the test care about judgment. If last decade’s surprise was that social media optimized itself into a hedonism machine, this decade’s surprise is that work may too. I love the thinking in this excerpt, though the full piece is essential reading:

Social media initially fed us lots of new information, based on basic preferences like “this person is my friend.”

In direct and indirect ways—by liking stuff, by abandoning old apps and using new ones—we told social media companies what information we preferred, and the system responded. It wasn’t manipulative or misaligned, exactly; it was simply giving us more of what we ordered.

The industry refined itself with devastating precision. The algorithms got more discerning. The products got easier to use, and asked less of us. The experiences became emotionally seductive. The medium transformed from text to pictures to videos to short-form phone-optimized swipeable autoplaying videos. We responded by using more and more and more of it.

And now, we have TikTok: The sharp edge of the evolutionary tree; the final product of a trillion-dollar lab experiment; the culmination of a million A/B tests. There was no enlightenment; there was a hedonistic experience machine.

The market will tune models to whatever maximizes long-term user engagement.

Maybe that’s speed, or maybe that’s being a little sycophantic here and there. Maybe that’s fewer jobs for new grads because the most cost-effective competence lives in RAM. But if we don’t re-architect around evaluation, exception-handling, and judgment, we may wake up to organizations that run autonomously and produce work no one but AI is positioned to own. Informed policy is needed, too. But “slow down” is a blunt instrument to stave off progress when the better lever is “reshape.”

So let’s keep going.

Opportunity Area 4: Exchange Rate for Credibility

In finance, mark-to-market (MTM) is the practice of reporting our position or portfolio value in the present tense: pricing things at what they’re worth today, not what we paid in the past or hope they’ll fetch in the future. It’s standard accounting for futures and derivatives trading, where positions are adjusted daily to meet margin. This forced, day-by-day repricing drags losses into the light, prevents paper gains from masking risk, and keeps the balance sheet honest.[4][5]
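To make the daily repricing concrete, here is a small Python sketch of marking a long futures position to market. The prices, contract multiplier, and margin levels are made-up numbers for illustration, and `mark_to_market` is a hypothetical helper; it assumes the common convention that a margin call tops the account back up to the initial margin.

```python
def mark_to_market(entry_price, settle_prices, contracts, multiplier,
                   initial_margin, maintenance_margin):
    """Reprice a long futures position at each day's settlement price.

    Returns one row per day: (day, settle, daily_pnl, balance, margin_call).
    """
    rows = []
    previous = entry_price
    balance = initial_margin
    for day, settle in enumerate(settle_prices, start=1):
        # Today's gain or loss is realized immediately, not deferred.
        daily_pnl = (settle - previous) * contracts * multiplier
        balance += daily_pnl
        margin_call = 0
        if balance < maintenance_margin:
            # Assumed convention: top the account back up to initial margin.
            margin_call = initial_margin - balance
            balance = initial_margin
        rows.append((day, settle, daily_pnl, balance, margin_call))
        previous = settle
    return rows


# Illustrative numbers only: one contract, bought at 100, multiplier of 50.
for row in mark_to_market(entry_price=100, settle_prices=[98, 95, 97],
                          contracts=1, multiplier=50,
                          initial_margin=500, maintenance_margin=300):
    print(row)
```

On the second day the loss pushes the balance below the maintenance level, so the shortfall has to be posted right away rather than hidden until the position closes. That is the property the proposal below borrows.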

I propose we observe the same standard across AI labs and organizational rollouts:

Report what’s actually possible today, on real-world data and edge cases. Show what’s shipped, what’s used, what broke, and what was fixed.

Name which decisions were owned and which exceptions were retired: by whom, and why? Identify and address risk before we forge ahead.

Measure institutions by their exchange rate for credibility, not their appetite for hype (sorry, Cluely). Exercise personal judgment when numbers are noisy.

Do this so the system can’t covertly optimize for attention, sycophancy, or misaligned reward. Do this so humans stay in the loop. Do this so we optimize for the part we intend to keep: evaluation, responsibility, and the kind of judgment that makes tomorrow sturdier than today.

🌱 With thanks to Stefan, Elisabeth, Melody, Arthur, and Nico for sharpening my thinking.

27% of Americans have skipped some medical care due to cost concerns (Federal Reserve).

Can we please prioritize automating this part of the job?

Case in point: The org chart is never the real org chart. The map looks like little boxes in Workday, but the territory behaves like a Neopian Bazaar.

Besides a brief stint as an analyst, I can’t explain why I’m drawn to financial history and communist history alike. Perhaps the Western world’s genre of capitalist history?